Introduction

I’ve been working on an open-source project, NSpM, a question answering system over DBpedia. Its Interpreter component, which translates a natural language question into a formal query (SPARQL), treats this translation as a machine translation task. So in this blog, I will talk about the three widely used bases of seq2seq models: RNN, CNN and Transformer.

RNN

The dominant approach in NLP, still very popular today, is to use a series of bi-directional recurrent neural networks (RNNs) to encode the input sequence and to generate (decode) an output sequence. This recurrent approach matches the natural way our brain reads: one word after another.

An RNN (bi-LSTM) is currently the base NMT model of NSpM. It uses a chain of recurrent neural units to encode the input sequence into a context vector.
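To make the recurrence concrete, here is a minimal sketch of a plain (Elman) RNN encoder. It is not the bi-LSTM NSpM actually uses: it runs in one direction only, and the helper `rnn_encode` and the weights `W_x`, `W_h`, `b` are hypothetical, randomly initialized for illustration. The final hidden state plays the role of the context vector.

```python
import math
import random

def rnn_encode(inputs, W_x, W_h, b):
    """Encode a sequence of input vectors with a vanilla RNN.

    Returns the final hidden state, used here as the context vector.
    """
    hidden_size = len(b)
    h = [0.0] * hidden_size  # initial hidden state h_0
    for x in inputs:  # strictly left to right: step t needs h_{t-1}
        h = [
            math.tanh(
                sum(W_x[i][j] * x[j] for j in range(len(x)))
                + sum(W_h[i][k] * h[k] for k in range(hidden_size))
                + b[i]
            )
            for i in range(hidden_size)
        ]
    return h

random.seed(0)
input_size, hidden_size = 3, 4
# Hypothetical, randomly initialized parameters (a trained model would learn these).
W_x = [[random.uniform(-0.5, 0.5) for _ in range(input_size)] for _ in range(hidden_size)]
W_h = [[random.uniform(-0.5, 0.5) for _ in range(hidden_size)] for _ in range(hidden_size)]
b = [0.0] * hidden_size
# Five toy "word vectors" standing in for an embedded sentence.
sentence = [[random.uniform(-1, 1) for _ in range(input_size)] for _ in range(5)]
context = rnn_encode(sentence, W_x, W_h, b)
print(len(context))  # -> 4
```

Note that the loop cannot be parallelized over time: each step needs the hidden state produced by the previous one.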

Solutions for handling long sequences

The main limitation of a recurrent model is its ability to handle long sequences. I will briefly present two principal solutions to this problem.

Attention

Since a fixed-length context vector is problematic for translating long sentences, the attention mechanism was introduced: it dynamically computes a context vector at each decoding step from all the encoder hidden states.
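A minimal sketch of how an attention context vector can be computed for a single decoding step. I assume dot-product scoring here (as in Luong-style attention); Bahdanau et al.'s original formulation scores with a small feed-forward network instead. The function names are illustrative, not taken from NSpM.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_context(decoder_state, encoder_states):
    """Compute the context vector for one decoding step.

    Each encoder hidden state is scored by its dot product with the current
    decoder state; the scores are normalized with softmax and used to take a
    weighted sum of the encoder states.
    """
    scores = [sum(d * h for d, h in zip(decoder_state, enc)) for enc in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [sum(w * enc[i] for w, enc in zip(weights, encoder_states)) for i in range(dim)]
    return context, weights

encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
context, weights = attention_context([2.0, 0.0], encoder_states)
print(weights)  # the first and third states get equal, larger weights
```

Because the weights are recomputed for every decoder state, each output word can focus on a different part of the input instead of relying on one fixed vector.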

LSTM

While the vanilla RNN struggles with long sequences (vanishing gradients and long-range dependencies), its variant, the LSTM, handles them much better. It maintains a cell state that runs along the entire sequence, and uses a gate mechanism to decide which information in the cell state to forget and which new information from the current input to remember.
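The gate mechanism can be sketched as one step of an LSTM cell. To keep it readable I assume a hidden size of 1, so all weights are scalars; real implementations use vectors and weight matrices, and the parameter layout in `params` is hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell (hidden size 1, for readability).

    p maps each gate name to a hypothetical (w_x, w_h, b) triple of scalars.
    """
    def gate(name, act):
        w_x, w_h, b = p[name]
        return act(w_x * x + w_h * h_prev + b)

    f = gate("forget", sigmoid)       # how much of the old cell state to keep
    i = gate("input", sigmoid)        # how much of the new candidate to write
    g = gate("candidate", math.tanh)  # candidate information from the current input
    o = gate("output", sigmoid)       # how much of the cell state to expose
    c = f * c_prev + i * g            # cell state: additive update along the sequence
    h = o * math.tanh(c)              # hidden state passed to the next step
    return h, c

params = {name: (0.5, 0.5, 0.0) for name in ("forget", "input", "candidate", "output")}
h, c = 0.0, 0.0
for x in [1.0, -0.5, 0.25]:
    h, c = lstm_step(x, h, c, params)
print(h, c)
```

The cell update `c = f * c_prev + i * g` is additive, which is what lets information persist across many steps instead of being squashed through a nonlinearity at every step as in the vanilla RNN.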

Conclusion on RNN

An RNN processes a sentence the natural way: from left to right (or right to left), one word after another. But this same property restricts the parallelization of computation, because each state has to wait for the computation of the previous state. RNNs are also still limited in handling very long sequences (length > 200).

CNN

Convolutional neural networks (CNNs) are less common than RNNs for sequence modeling. A CNN builds the representation of a word by aggregating local information from its neighbors through convolution operations.
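A minimal sketch of one convolution filter sliding over a sentence, assuming an odd kernel width and zero padding at the edges. A real convolutional layer applies many such filters to produce a vector per position; this single hypothetical filter produces one number per position.

```python
def conv1d(sequence, kernel, bias=0.0):
    """Apply one 1-D convolution filter over a sequence of word vectors.

    sequence: list of word vectors (each a list of floats)
    kernel:   list of k weight vectors, one per position in the window (k odd)
    Each output aggregates the word and its neighbors inside the window; zero
    padding keeps the output the same length as the input.
    """
    k = len(kernel)
    pad = k // 2
    dim = len(sequence[0])
    padded = [[0.0] * dim] * pad + sequence + [[0.0] * dim] * pad
    outputs = []
    for t in range(len(sequence)):  # positions are independent, so this could run in parallel
        window = padded[t:t + k]
        outputs.append(
            sum(w_j * x_j for w, x in zip(kernel, window) for w_j, x_j in zip(w, x)) + bias
        )
    return outputs

# Three 1-dimensional "word vectors" and a width-3 filter that sums its window.
print(conv1d([[1.0], [2.0], [3.0]], [[1.0], [1.0], [1.0]]))  # -> [3.0, 6.0, 5.0]
```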

Comparison with RNN

Compared with recurrent networks, the CNN approach makes it easier to discover compositional structure in sequences, since representations are built hierarchically. Convolutional networks do not depend on the computations of the previous time step. Consequently, computation can be parallelized over every element of a sequence, and the path for capturing long-range dependencies is shorter.

Some tricks used in the CNN model

In this part, we focus on the convolutional model of fairseq [1].

Position Embeddings

Since the convolution and pooling operations limit a CNN's ability to learn position information, fairseq adds a position embedding to each word embedding through an additive operation.
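A minimal sketch of the additive position embedding, assuming two lookup tables (randomly initialized here, learned in a real model): one indexed by word id and one by absolute position. The table sizes and the `embed` helper are hypothetical.

```python
import random

random.seed(0)
vocab_size, max_len, dim = 10, 8, 4

# Two lookup tables: one indexed by word id, one by absolute position.
word_emb = [[random.gauss(0, 0.1) for _ in range(dim)] for _ in range(vocab_size)]
pos_emb = [[random.gauss(0, 0.1) for _ in range(dim)] for _ in range(max_len)]

def embed(token_ids):
    """Return word embedding + position embedding (element-wise sum) for each token."""
    return [
        [w + p for w, p in zip(word_emb[tok], pos_emb[pos])]
        for pos, tok in enumerate(token_ids)
    ]

sent = embed([3, 7, 3])
# Word 3 appears twice, but its two vectors differ because the positions differ.
print(sent[0] != sent[2])  # -> True
```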

Gated Linear Unit

The model also uses gated linear units (GLU), whose gates control which inputs of the current context are relevant for each word.
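The GLU itself is simple: the input is split into two halves A and B, and the output is A ⊗ σ(B), where σ is the sigmoid and ⊗ is element-wise multiplication. A sketch in plain Python (the function name `glu` is my own choice):

```python
import math

def glu(x):
    """Gated linear unit: split x into halves A and B, return A * sigmoid(B).

    The sigmoid half acts as a gate deciding how much of each component of A
    is passed on. The output is half the size of the input.
    """
    half = len(x) // 2
    a, b = x[:half], x[half:]
    return [ai * (1.0 / (1.0 + math.exp(-bi))) for ai, bi in zip(a, b)]

# A gate value of 0 lets half of A through; a large gate value lets all of it through.
print(glu([2.0, -4.0, 0.0, 100.0]))  # -> [1.0, -4.0]
```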

Multi-step Attention

As in RNN-based models, the CNN model equips its decoder with an attention mechanism. Since the decoder has multiple convolutional layers, attention is computed separately in each layer.
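A simplified sketch of multi-step attention, loosely following the description in [1]: in every decoder layer, each decoder state attends over the encoder outputs z_j, the attended values are z_j + e_j (encoder output plus input embedding), and the resulting context is added back to the state before the next layer. I omit the convolution blocks, linear projections, target embeddings and scaling used in the paper, so treat this only as an illustration of the control flow; all names are hypothetical.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def layer_attention(dec_states, enc_out, enc_emb):
    """Attention for one decoder layer: score against z_j, attend over z_j + e_j."""
    values = [[z + e for z, e in zip(zj, ej)] for zj, ej in zip(enc_out, enc_emb)]
    contexts = []
    for d in dec_states:
        weights = softmax([dot(d, zj) for zj in enc_out])
        contexts.append([sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(d))])
    return contexts

def decode_with_multistep_attention(dec_states, enc_out, enc_emb, num_layers):
    """Recompute attention in every layer, using the states updated by the layer below."""
    h = dec_states
    for _ in range(num_layers):
        # (a real decoder would apply a convolution + GLU block here; omitted)
        ctx = layer_attention(h, enc_out, enc_emb)
        h = [[a + c for a, c in zip(hs, cs)] for hs, cs in zip(h, ctx)]
    return h

print(decode_with_multistep_attention([[1.0, 2.0]], [[0.5, 0.5]], [[0.5, -0.5]], num_layers=2))
# -> [[3.0, 2.0]]
```

Because each layer attends with states that already include the previous layer's context, later layers can take into account what was attended earlier.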

Conclusion on CNN

A Seq2Seq model based entirely on CNNs can be computed in parallel, which greatly reduces training time compared with RNNs, and it can reach state-of-the-art performance. However, it uses a lot of memory, and it relies on many tricks to reach its final performance; most importantly, tuning parameters on large datasets is not easy with so many tricks. In addition, it needs a very deep stack of convolution blocks to handle long dependencies: for example, stacking 6 blocks with kernel_size = 5 results in an input field of 25 elements, i.e. each output depends on 25 words.
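The receptive-field arithmetic can be checked directly: with stride 1 and no dilation, each additional layer of width k lets an output see k - 1 more positions, so L layers cover 1 + L(k - 1) words. The helper names below are hypothetical.

```python
import math

def receptive_field(num_layers, kernel_size):
    """Number of input positions one output can see after stacking
    `num_layers` convolutions of width `kernel_size` (stride 1, no dilation)."""
    return 1 + num_layers * (kernel_size - 1)

def layers_needed(span, kernel_size):
    """Smallest number of stacked layers whose receptive field covers `span` words."""
    return max(0, math.ceil((span - 1) / (kernel_size - 1)))

print(receptive_field(6, 5))  # -> 25, the example from [1]
print(layers_needed(200, 5))  # -> 50
```

So covering a 200-word sequence with width-5 kernels would take 50 stacked layers, which illustrates why long dependencies push CNN models toward very deep stacks.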

Transformer

So here comes the Transformer, a real breakthrough in NLP. It introduces a novel network architecture that is neither an RNN nor a CNN, enabling parallelization while also delivering better performance.

The New Model

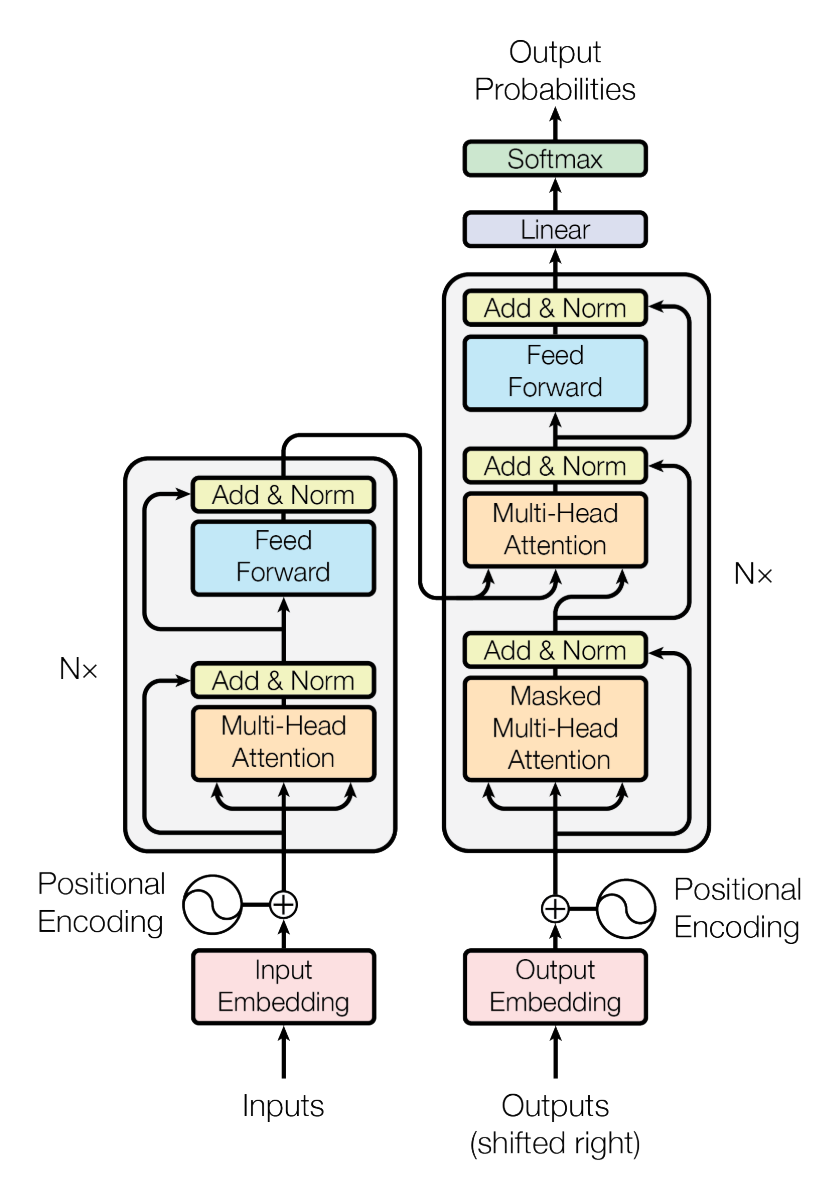

Figure 1: Model Architecture of Transformer [2]

Each encoder layer has two sub-layers: a multi-head self-attention layer and a feed-forward layer. Each decoder layer has three sub-layers: in addition to the two found in the encoder, the decoder inserts a third sub-layer that performs multi-head attention over the output of the encoder stack.

Self Attention

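The new attention mechanism, scaled dot-product attention, is calculated as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^\top}{\sqrt{d_k}}\right)V$$

where Q, K and V are the query, key and value matrices and d_k is the dimension of the keys.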

This mechanism is called self-attention because, instead of computing the relevance between a decoder hidden state and the encoder hidden states, it computes only the relevance among the elements of a single sequence: the inputs with each other, or the outputs with each other.
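A minimal single-head sketch of self-attention in plain Python. Queries, keys and values are all projected from the same sequence X; in the real model the projection matrices are learned, while here I pass identity matrices for illustration. Masking and multiple heads are omitted, and all helper names are my own.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, with the softmax applied row by row."""
    d_k = len(K[0])
    scores = matmul(Q, transpose(K))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V), weights

def self_attention(X, W_q, W_k, W_v):
    """Queries, keys and values all come from the same sequence X."""
    return scaled_dot_product_attention(matmul(X, W_q), matmul(X, W_k), matmul(X, W_v))

X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token vectors
identity = [[1.0, 0.0], [0.0, 1.0]]       # stand-in for learned projections
out, weights = self_attention(X, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Every row of `weights` is computed from the whole sequence at once, with no dependency on a previous time step, which is what makes the Transformer parallelizable.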

Conclusion on Transformer

The Transformer is a breakthrough in MT and can be used in many other NLP domains. It enables parallelization and improves on earlier attention mechanisms by introducing self-attention. Although it is still limited in handling hyper-long sequences (length > 512, a limit usually encountered when language modeling is treated as a character-level task [3]), variants of the Transformer that address this limit and improve its capacity have already appeared, and more will follow.

Conclusion of the three models

Although the Transformer has proven to be the best model for handling really long sequences, RNN- and CNN-based models can still work very well, or even better than the Transformer, on short-sequence tasks. For example, Yin et al. (2019) [4] report that a CNN-based model outperforms the other models they compared on a KGQA task in terms of BLEU and accuracy.

In the next few months, we will run the same experiments on our own NL2SPARQL task and datasets, so let’s see which model comes out on top.

References

[1] Jonas Gehring, Michael Auli, et al. Convolutional Sequence to Sequence Learning. arXiv:1705.03122v3.

[2] Ashish Vaswani, Noam Shazeer, et al. Attention Is All You Need. arXiv:1706.03762v5.

[3] Al-Rfou et al. Character-Level Language Modeling with Deeper Self-Attention. 2018.

[4] Xiaoyu Yin, Dagmar Gromann, and Sebastian Rudolph. Neural Machine Translating from Natural Language to SPARQL.